Why AI agents keep disappointing us

And what LLM observability and evaluation tools should look like if we want better assistants

Every week, startups launch AI agents for FP&A, travel booking, customer support, and more. Every so often, someone claims to have built the ultimate AI assistant.

Per AWS, AI agents are “autonomous intelligent systems performing specific tasks without human intervention.” Given this definition, we expect agents to replicate what we do in our CRMs, sales outreach tools, and other web-based tools. But benchmarks still show a huge gap between AGI expectations and agentic reality. For example, Anthropic’s hot new computer use feature currently scores 14.9% on the screenshot-based OSWorld benchmark.

How evaluations are broken

In my own experience, the hardest part of using agents has been guiding them. For example, executive assistant agents never get things 100% right on the first try. I have some rules for scheduling meetings: external coffees go on Friday afternoons, but they can shift to Thursday if my direct reports need to schedule something that overlaps. I’d only have to explain this preference to a human EA once, but with an AI agent I have to go through an annoying series of back-and-forths.

The problem is that agents still rely on 2000s-style CSAT feedback: rate what the agent did with a thumbs up or thumbs down. Sometimes there’s a “Tell us more” text field, or a way to chat with the agent and have it acknowledge its mistakes. Imagine if those were the only ways you could communicate with a human assistant… If we expect human-like results from agents, we need to give them higher-quality feedback.

A new design proposal

The most powerful types of feedback are direct revisions to work. We improve our writing by having our work edited, and seeing the difference between our first draft and the tweaks our editor made. Whether it’s on physical paper or on Google Docs, we learn more quickly by seeing edits superimposed on the original.

Our business apps (think Google Calendar, Salesforce, HubSpot, ServiceNow, SAP) should also have version control so that humans and agents can collaborate better.

Inspired by this idea and by GitHub code diffs, I created a conceptual design with two goals: a) providing greater visibility into agents’ actions and b) creating a more native, higher-fidelity feedback tool. I’ve applied it to Google Calendar first, and will eventually explore other business applications like CRMs and ERPs.

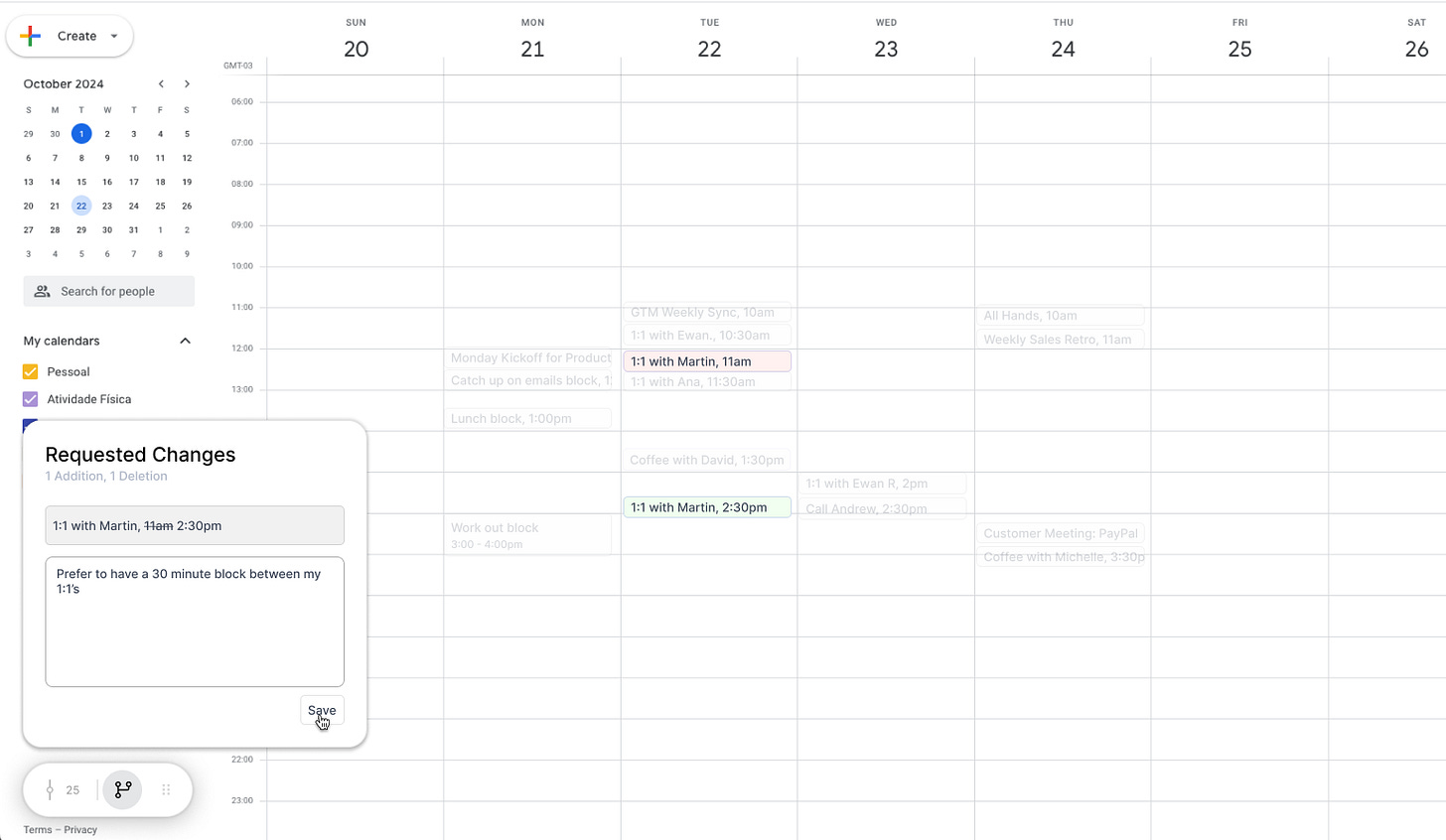

Below, you’ll see that this “task log” widget can sit atop any interface (see bottom left). With it, human users can trace agents’ actions and edit agents’ work.

When you click in, the main view is a list of version snapshots.

Click into a specific snapshot to see what actions the agent took on your calendar.

You’ll notice there’s a Give Feedback button on the snapshot. Pressing it puts you in Feedback mode, where you can edit or delete the agent’s events, showing the agent the correct path.

Once you make the edit, it shows up as a revision in the task log.
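To make the snapshot-and-revision idea more concrete, here is a minimal Python sketch of how a task log might store calendar versions and compute a GitHub-style diff between the agent’s snapshot and a human revision. Everything in it (the `Event`, `Snapshot`, and `TaskLog` names, the `diff` function, and keying events by a stable ID) is a hypothetical illustration under those assumptions, not the actual design’s implementation or any Google Calendar API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    """Hypothetical, simplified calendar event."""
    title: str
    day: str
    start: str  # 24-hour time, e.g. "14:00"


@dataclass
class Snapshot:
    """One version of the calendar, written by the agent or by a human."""
    author: str               # "agent" or "human"
    events: dict[str, Event]  # stable event ID (assumed) -> event


@dataclass
class TaskLog:
    """Hypothetical task log: an append-only history of calendar snapshots."""
    snapshots: list[Snapshot] = field(default_factory=list)

    def commit(self, snapshot: Snapshot) -> None:
        self.snapshots.append(snapshot)


def diff(before: Snapshot, after: Snapshot) -> dict[str, list[str]]:
    """GitHub-style diff: which event IDs were added, removed, or modified."""
    added = sorted(after.events.keys() - before.events.keys())
    removed = sorted(before.events.keys() - after.events.keys())
    modified = sorted(
        event_id
        for event_id in before.events.keys() & after.events.keys()
        if before.events[event_id] != after.events[event_id]
    )
    return {"added": added, "removed": removed, "modified": modified}


# Usage: the agent books an external coffee on Wednesday morning...
log = TaskLog()
log.commit(Snapshot("agent", {
    "coffee-1": Event("Coffee with vendor", "Wednesday", "10:00"),
    "1on1-1": Event("1:1 with direct report", "Thursday", "15:00"),
}))
# ...and in Feedback mode the human moves it to Friday afternoon.
log.commit(Snapshot("human", {
    "coffee-1": Event("Coffee with vendor", "Friday", "14:00"),
    "1on1-1": Event("1:1 with direct report", "Thursday", "15:00"),
}))
print(diff(log.snapshots[0], log.snapshots[1]))
# {'added': [], 'removed': [], 'modified': ['coffee-1']}
```

Keying events by a stable ID is what lets the diff report a moved event as modified rather than as a deletion plus an addition; whether a given calendar backend exposes IDs that survive edits is an assumption of this sketch.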

With this agent task log system, we can build stronger evaluation loops and, ultimately, more reliable agents. The specific UX for giving feedback on agent tasks will vary widely across business apps, but this initial exploration may spark ideas if you’re also working on new UX paradigms for agents.
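As one hypothetical way such revisions could feed an evaluation loop, the sketch below treats each human revision as a label on the agent’s snapshot and scores the agent by the share of its proposed events that the human left unchanged. The `acceptance_rate` function and the metric itself are illustrative assumptions, not part of the design above; a real evaluation would likely weigh different kinds of edits differently.

```python
def acceptance_rate(pairs: list[tuple[dict[str, str], dict[str, str]]]) -> float:
    """Share of agent-proposed events that the human left unchanged.

    Each pair is (agent_snapshot, human_revision); a snapshot maps a
    hypothetical event ID to a short description of the event. Events the
    human added from scratch are not counted by this metric.
    """
    kept = total = 0
    for agent, human in pairs:
        for event_id, event in agent.items():
            total += 1
            if human.get(event_id) == event:  # unchanged, so implicitly approved
                kept += 1
    if total == 0:
        raise ValueError("no agent-proposed events to score")
    return kept / total


pairs = [
    # Task 1: the human moved the coffee but kept the 1:1.
    ({"coffee-1": "Coffee, Wed 10:00", "1on1-1": "1:1, Thu 15:00"},
     {"coffee-1": "Coffee, Fri 14:00", "1on1-1": "1:1, Thu 15:00"}),
    # Task 2: the human accepted the agent's work as-is.
    ({"sync-1": "Team sync, Mon 09:00"},
     {"sync-1": "Team sync, Mon 09:00"}),
]
print(acceptance_rate(pairs))  # 0.6666666666666666
```

Unlike a thumbs up or down, the stored (agent, human) pairs also record which events changed and how, so they could double as test cases when the agent is re-evaluated later.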

Limitations

The design above might work for AI agents focused on calendar actions, but these patterns don’t easily extend to other types of apps. More complex software needs further exploration, with additional considerations for apps with:

Work that happens across multiple pages

Multi-hour delays between steps

Multiple data objects in play, e.g., company, deal, person objects in CRMs

The future of agents

With all their magic-wand and sparkle emojis, we expect agents to fulfill our dreams. But we’re still far from that because of the limits of today’s agent infrastructure. We need to drastically improve LLM observability and evaluation to build agents that self-improve over time. This can only happen with:

A version control system to observe agent actions in any app

Evaluation tools for humans to give higher fidelity feedback to agents

I hope this initial exploration gives you some ideas for where LLM evaluation design should go. If you’re building agents or thinking about the space, please reach out! I’ll be publishing more pieces and would love to collaborate with others.

Many thanks to my colleague Filipe for his collaboration on this piece.
